Terraform GitHub Actions workflows

With HashiConf '25 a few weeks away, I thought I would revisit some of the hallway-track slides on “GitHub-based Terraform workflow deployments” that I shared at HashiConf '24, most of which I originally created while working with customers. These are sample workflow patterns that you can use as-is or adapt to your organization's needs. I use GitHub Actions as the CI system because it is easy for readers to reproduce in their personal repositories.


Introduction

This post covers practical Terraform deployment patterns using GitHub Actions that I’ve encountered while working with customers. You’ll learn about different workflow scenarios including baseline deployments, feature flag implementations, cleanup strategies, and deployment to regulated environments. Each pattern addresses specific organizational needs and constraints, from simple branch-based deployments to complex multi-environment feature flagging.

Components

- GitHub Actions workflows

- HCP Terraform workspaces

- Terraform configuration stored in a GitHub repository

- AWS credentials, either obtained by GitHub Actions through OIDC or provided as HCP Terraform dynamic credentials, which HCP Terraform retrieves from AWS using OIDC. Either option is preferable to static credentials.

Workflow scenarios

Foundational workflows

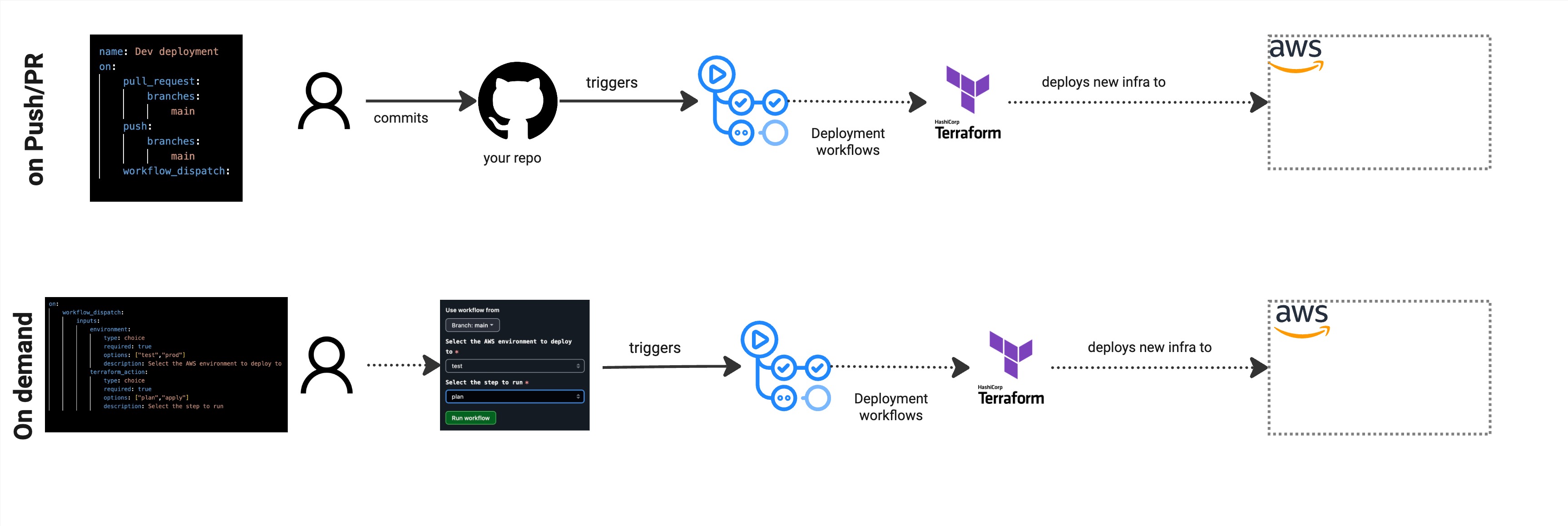

GitHub Actions lets you trigger workflows on several kinds of events, including:

- Commit to a branch

- On demand: select the workflow to trigger from the Actions tab of the repository

- On a schedule

The top portion of the image shows a commit triggering a Terraform deployment workflow, which in this case provisions your infrastructure in AWS through HCP Terraform.

The bottom portion shows an “on demand” workflow with manual triggers that users can invoke. As the workflow owner, you can offer users choices for the target environment, the run type (plan only or apply), and other relevant options. I use environment and type parameters so that, after changes are deployed to dev, users can run speculative plans against higher environments such as pre-prod or prod. This gives users clear visibility into what would change between the new configuration and what is currently provisioned in the target account.
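One way to back the environment choice is to tag the per-environment workspaces and let the workflow pick one at run time. This is a minimal sketch, assuming a hypothetical organization example-org and workspaces that share the tag app (for example app-dev, app-preprod, and app-prod). The workflow would set the TF_WORKSPACE environment variable to the selected workspace before running terraform init. A terraform plan started from the CLI against an HCP Terraform workspace runs as a speculative plan, which suits the plan-only run type.

```hcl
terraform {
  cloud {
    # Hypothetical organization name; replace with your own.
    organization = "example-org"

    workspaces {
      # Every workspace tagged "app" (e.g. app-dev, app-preprod, app-prod) is eligible.
      # The workflow selects one at run time by setting TF_WORKSPACE.
      tags = ["app"]
    }
  }
}
```

Because the configuration names a tag rather than a fixed workspace, the same code serves every environment, and the workflow input only decides which workspace receives the run.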

Custom Workflows

Branch-based Deployment Patterns

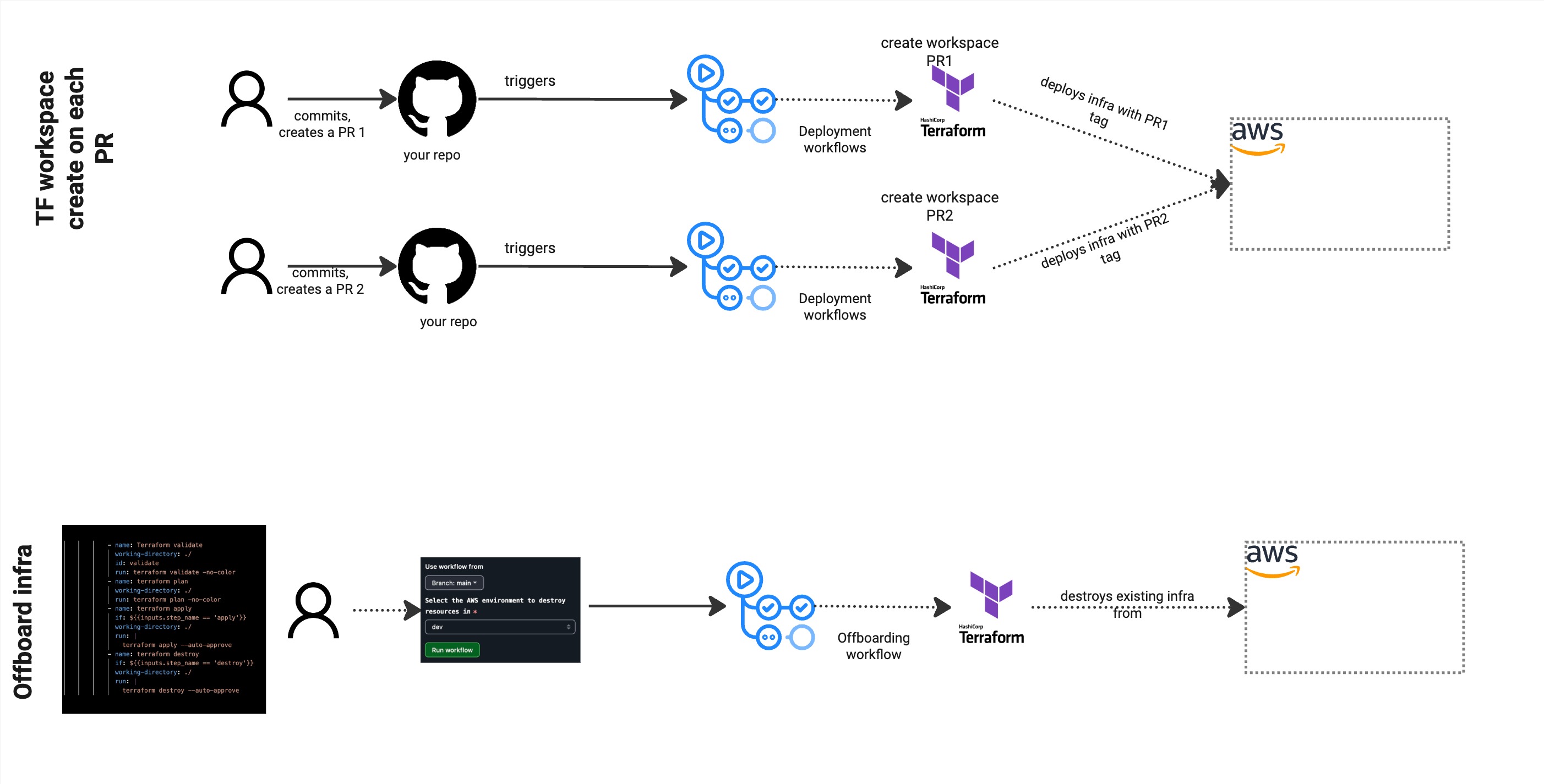

Customers differ in the kinds of workflows and deployment patterns they need. Some prefer to use PRs to deploy to a single dev/sandbox account, followed by a reconciliation deployment from the main branch. Others have the luxury of provisioning multiple instances of a workload, into a single account or across multiple accounts, based on the PR source branch. In the latter scenario, you can use branch-specific prefixes and tags so that the resources provisioned for each branch stay distinct within an account.
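A minimal sketch of branch-specific naming and tagging, assuming the workflow passes the PR source branch into a hypothetical branch_name variable (for example from github.head_ref). The myapp prefix, the region, and the S3 bucket are illustrative placeholders.

```hcl
variable "branch_name" {
  description = "PR source branch, supplied by the workflow (hypothetical input)."
  type        = string
}

locals {
  # Lowercase the branch and replace characters not allowed in many AWS names,
  # e.g. "feature/JIRA-1234" becomes "feature-jira-1234"; cap the length at 20.
  branch_slug = substr(replace(lower(var.branch_name), "/[^a-z0-9-]/", "-"), 0, 20)
  name_prefix = "myapp-${local.branch_slug}"
}

provider "aws" {
  region = "us-east-1" # assumed region

  # Tag everything with its branch so cleanup jobs and cost reports can find it.
  default_tags {
    tags = {
      Branch    = var.branch_name
      ManagedBy = "terraform"
    }
  }
}

resource "aws_s3_bucket" "artifacts" {
  # Unique per branch, so parallel PRs do not collide in the same account.
  bucket = "${local.name_prefix}-artifacts"
}
```

Each branch also needs its own state, for example a separate HCP Terraform workspace per branch, so that one PR's apply never touches another PR's resources.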

State management and consistent naming standards become crucial in this case, because each branch needs its own state and its own uniquely named resources. Keep in mind that this flow also raises costs, since every open PR provisions its own instance of the resources. I usually recommend a stale-PR workflow that removes the infrastructure based on an external trigger, or a manual cleanup workflow for PRs that are stuck or blocked. You may also need additional logic on merge to clean up any infrastructure provisioned through the PR-based approach. That brings us to the next set: cleanup workflows.

Cleanup Workflows

Cleanup workflows let users or application owners in a repository run terraform destroy against their infrastructure in a given account. Reasons include moving to a new account, decommissioning an application, and tearing down the branch-based PR infrastructure described above once the PR is merged to the default branch.

Feature flag workflow

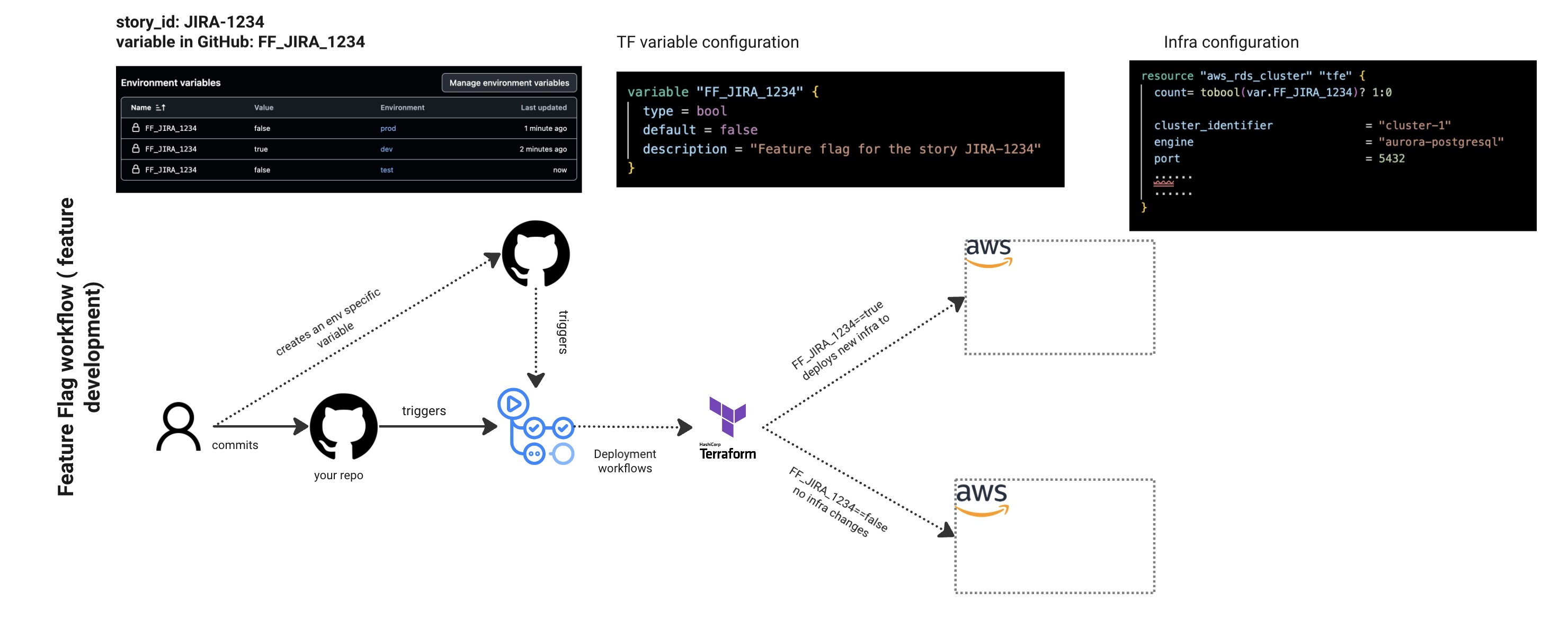

Feature flags are a common pattern in software delivery that separate deployment from release. I proposed this approach to a team lacking dedicated feature flagging tools like LaunchDarkly. While challenging to implement with infrastructure code, it provides valuable deployment control.

Situation: A team following trunk-based development has merged a feature that is not yet ready for users.

Proposal: Use GitHub environment variables together with Terraform's count meta-argument.

- Set the count parameter on infrastructure resources you’re not ready to deploy to production yet. The count uses an environment variable with true/false values to conditionally create those components.

- I use the Jira story ID as the environment variable name - this makes it easy to trace back to the original feature request.

- Create the same environment variable across all your target environments (dev/preprod/prod), but only set dev to true initially.

- When the Terraform workflow runs, your component gets deployed to dev while staying disabled in higher environments.

The picture shows:

- A complete feature flag workflow using story ID JIRA-1234

- Demonstrates how environment variables in GitHub (FF_JIRA_1234) map to Terraform variables and infrastructure configuration

- Flow: Developer commits → GitHub triggers deployment workflows → Terraform provisions AWS RDS cluster with feature flag controls

- Shows the Terraform configuration using count = tobool(var.FF_JIRA_1234) ? 1:0 to conditionally create resources
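A sketch of the configuration in the picture, assuming the workflow passes the value of the GitHub environment variable FF_JIRA_1234 into a Terraform input variable of the same name (how you wire that depends on your run mode, for example a workspace variable in HCP Terraform). The resource name jira_1234 and the RDS arguments are hypothetical placeholders, not a recommended cluster setup.

```hcl
# Feature flag for story JIRA-1234, supplied as "true" or "false" per environment.
variable "FF_JIRA_1234" {
  description = "Create the JIRA-1234 RDS cluster when true."
  type        = string
  default     = "false"

  validation {
    condition     = contains(["true", "false"], var.FF_JIRA_1234)
    error_message = "FF_JIRA_1234 must be \"true\" or \"false\"."
  }
}

resource "aws_rds_cluster" "jira_1234" {
  # One cluster when the flag is on, none when it is off.
  count = tobool(var.FF_JIRA_1234) ? 1 : 0

  # Placeholder settings for illustration only.
  cluster_identifier          = "jira-1234-cluster"
  engine                      = "aurora-postgresql"
  master_username             = "dbadmin"
  manage_master_user_password = true
  skip_final_snapshot         = true
}
```

Defaulting the variable to "false" is a design choice: any environment where the flag is missing keeps the component off, so only dev creates the cluster until you flip the flag elsewhere.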

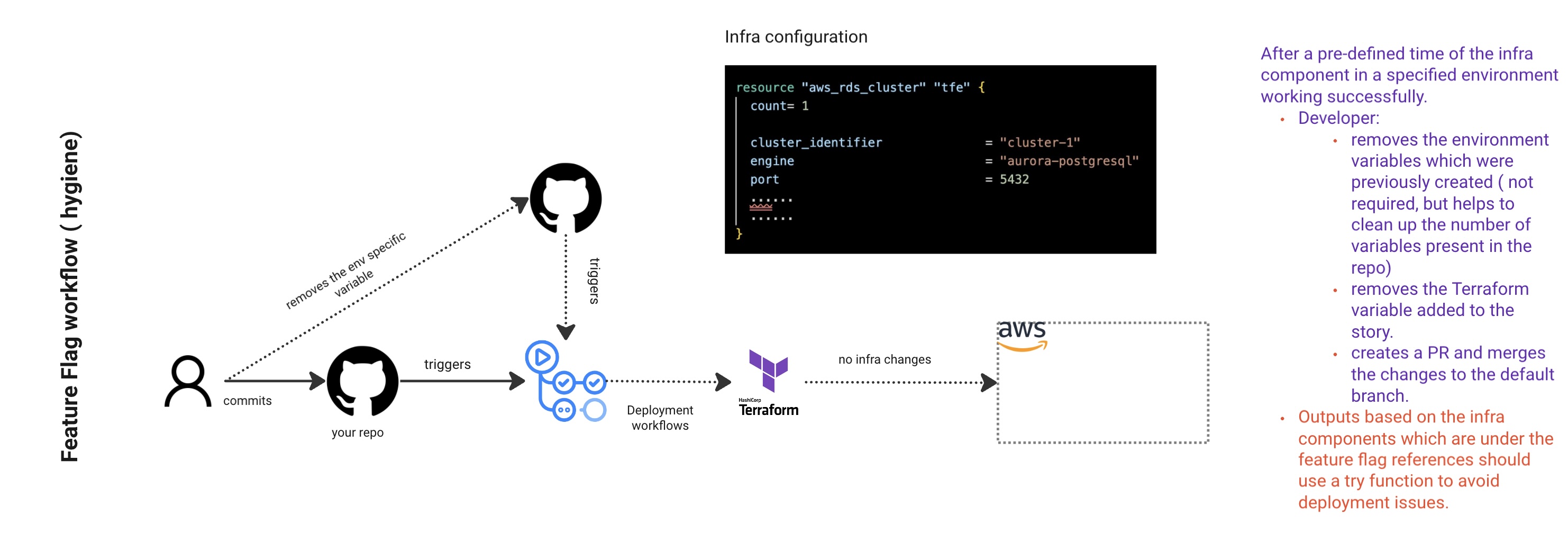

Feature flag hygiene

Every feature flag implementation needs a cleanup strategy. When adding feature flags, I create a corresponding cleanup PR as part of the same story for future removal. The cleanup process depends on your team’s definition of “done.” For example, after a month of the infrastructure component running successfully in production, you might decide it’s permanent and remove the feature flag.

The picture:

- Shows the cleanup process after feature development is complete

- Developer removes the story's environment variables and Terraform variables

- Creates a PR to merge changes to default branch

- Highlights that references to feature-flagged infrastructure components should use the try function, so deployments do not fail when the component does not exist

- Shows “no infra changes” when the feature flag is removed
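A minimal sketch of the try() guidance, assuming a hypothetical resource aws_rds_cluster.jira_1234 whose count is driven by a feature flag, so its [0] instance only exists while the flag is on.

```hcl
# aws_rds_cluster.jira_1234 is a hypothetical flag-controlled resource (count = 0 or 1).
output "jira_1234_cluster_endpoint" {
  # When count is 0, indexing [0] is an error; try() catches it and returns null.
  value = try(aws_rds_cluster.jira_1234[0].endpoint, null)
}
```

Any other reference to the flagged component, such as a security group rule or a parameter that stores its endpoint, can use the same pattern.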

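Keep in mind that I leave the count argument on the resource, set to 1, going forward rather than removing it. Removing the count meta-argument changes the resource's address (the [0] index goes away). Terraform can usually infer that move for a simple count removal, but you can make it explicit with a moved block, or use config-driven import, so the existing infrastructure is not replaced.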
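If you do remove the count meta-argument once the flag is gone, a moved block records that the existing object now lives at the un-indexed address. A minimal sketch, assuming the flagged resource is a hypothetical aws_rds_cluster.jira_1234 with placeholder settings.

```hcl
resource "aws_rds_cluster" "jira_1234" {
  # count removed now that the feature is permanent; placeholder settings only.
  cluster_identifier          = "jira-1234-cluster"
  engine                      = "aurora-postgresql"
  master_username             = "dbadmin"
  manage_master_user_password = true
  skip_final_snapshot         = true
}

# Map the old indexed address to the new one so the plan shows a move,
# not a destroy and re-create.
moved {
  from = aws_rds_cluster.jira_1234[0]
  to   = aws_rds_cluster.jira_1234
}
```

Once every workspace that used the flag has applied this change, the moved block has done its job and can be deleted from a root module.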

Deployment to regulated environments

Situation:

I worked with a team that needed to provision Kafka topics, service accounts, and access controls in a private Confluent Kafka cluster for their streaming workloads. However, network restrictions blocked their GitHub Actions and Terraform workflows from reaching the cluster. Their existing manual process required coordinating complex firewall rules with the networking team and managing IP-based access controls in their Confluent accounts, all to support Terraform Enterprise deployments across more than 200 customer repositories. You might face similar challenges when deploying to on-premises or regulated environments where HCP Terraform cannot establish direct connections.

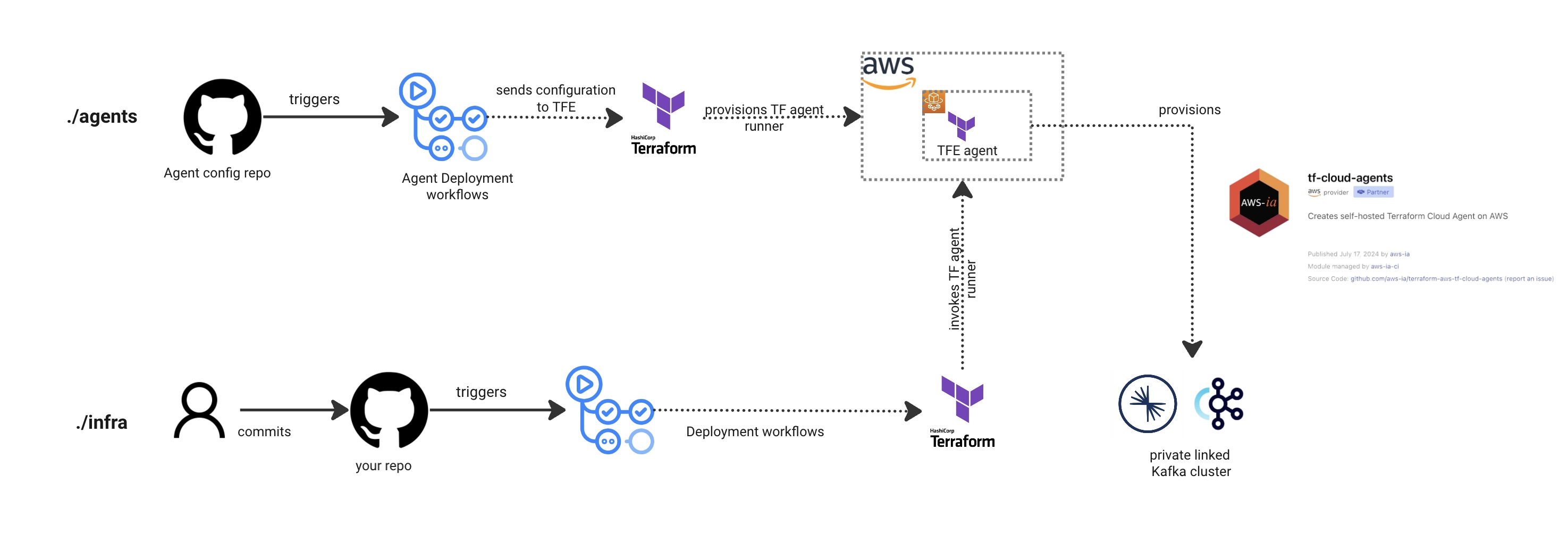

The picture shows two workflows:

- Set up an HCP Terraform agent that acts as a bridge to your regulated environment. For this team, I provisioned the agents on a Fargate cluster in the same VPC that had private connectivity to their Confluent cluster. The setup involves creating an agent pool in HCP Terraform (or TFE) and an agent token, which the agents use to authenticate and communicate securely with HCP Terraform. You then change your Terraform workspace's execution mode so that runs use the agent pool instead of the default HCP Terraform runners.

- The workflow remains unchanged from the user's perspective. Behind the scenes, HCP Terraform (or TFE) delegates the actual Terraform execution to the agents running on the Fargate cluster, which have the network access needed to provision resources on the private Confluent cluster.

- Reference implementation: https://github.com/aws-ia/terraform-aws-tf-cloud-agents
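The agent pool setup can itself be managed as code with the hashicorp/tfe provider. A minimal sketch, assuming a hypothetical organization example-org and workspace kafka-topics-prod. It uses tfe_workspace_settings to switch the execution mode, which recent tfe provider versions offer (older versions set execution_mode and agent_pool_id directly on tfe_workspace). The agents themselves, for example on Fargate, read the token from the TFC_AGENT_TOKEN environment variable.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# Pool that groups the agents running inside the private VPC (hypothetical names).
resource "tfe_agent_pool" "confluent" {
  name         = "confluent-private-agents"
  organization = "example-org"
}

# Token the agents use to register with the pool; tfe_agent_token.confluent.token
# is the secret value to hand to the agents (e.g. via a secrets store).
resource "tfe_agent_token" "confluent" {
  agent_pool_id = tfe_agent_pool.confluent.id
  description   = "Token for agents running in the Confluent VPC"
}

resource "tfe_workspace" "kafka_topics" {
  name         = "kafka-topics-prod"
  organization = "example-org"
}

# Route this workspace's runs to the agent pool instead of the default runners.
resource "tfe_workspace_settings" "kafka_topics" {
  workspace_id   = tfe_workspace.kafka_topics.id
  execution_mode = "agent"
  agent_pool_id  = tfe_agent_pool.confluent.id
}
```

Keeping the pool, token, and workspace settings in code means onboarding another repository that needs private connectivity is a small change to this configuration rather than another round of firewall requests.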

Conclusion

These workflows represent patterns I’ve encountered and implemented in real customer scenarios. You’ll likely need to adapt them to your specific environment and requirements. Have you come across other use cases that deviate from the standard GitHub Actions-based workflows for Terraform?

HashiConf '25 session: I’ll be speaking at HashiConf this year on the Cloud engineering track in a session titled “Making AI work for you: Terraform engineer blueprint.” The session covers proven techniques for writing reliable infrastructure code with AI, including practical strategies such as repository context, reusable prompts, MCP servers, and validation workflows.